Taesun Yeom

I'm a second-year M.S.-Ph.D. student at EffL (Efficient Learning Lab), POSTECH (advisor: Prof. Jaeho Lee).

My research focuses on understanding, from a theoretical perspective, the phenomena that arise in deep neural networks.

Email / GitHub / Google Scholar / LinkedIn / CV

Publications


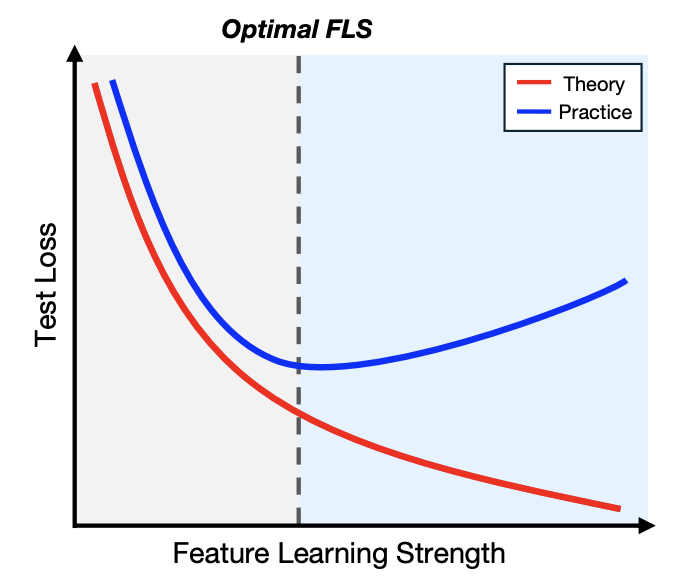

Over-Alignment vs Over-Fitting: The Role of Feature Learning Strength in Generalization
Taesun Yeom, Taehyeok Ha, and Jaeho Lee
Under review, 2026
arxiv
We establish the existence of an optimal feature learning strength in classification and provide a theoretical explanation.


Activation Quantization of Vision Encoders Needs Prefixing Registers
Seunghyeon Kim, Jinho Kim, Taesun Yeom, Wonpyo Park, Kyuyeun Kim, and Jaeho Lee
Under review, 2025
arxiv
We propose simple post-training quantization (PTQ) methods for ViTs that can be applied on top of existing approaches.


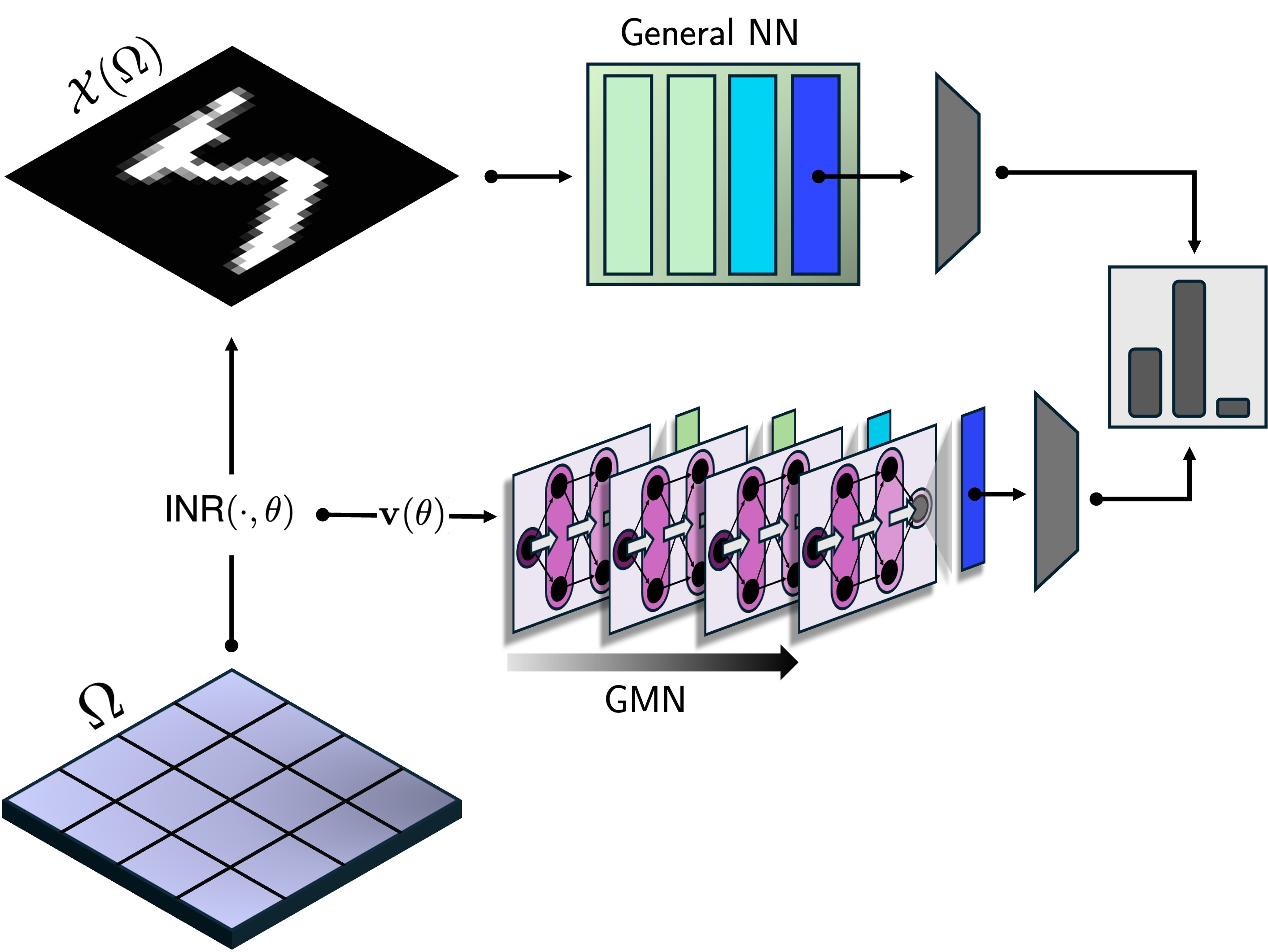

On the Internal Representations of Graph Metanetworks
Taesun Yeom and Jaeho Lee
ICLR Workshop on Weight Space Learning, 2025
arxiv / openreview
What do graph metanetworks learn?


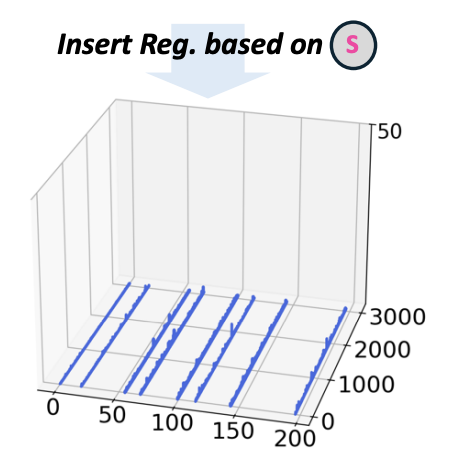

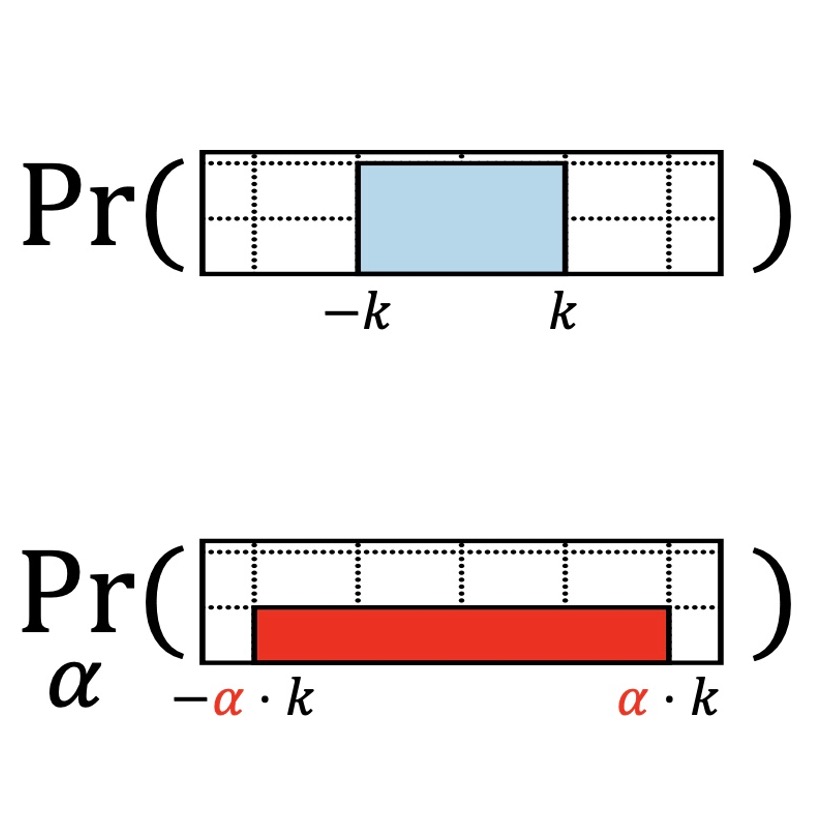

Fast Training of Sinusoidal Neural Fields via Scaling Initialization
Taesun Yeom*, Sangyoon Lee*, and Jaeho Lee
International Conference on Learning Representations (ICLR), 2025
arxiv / code / openreview
We propose a simple yet effective method to accelerate neural field training.


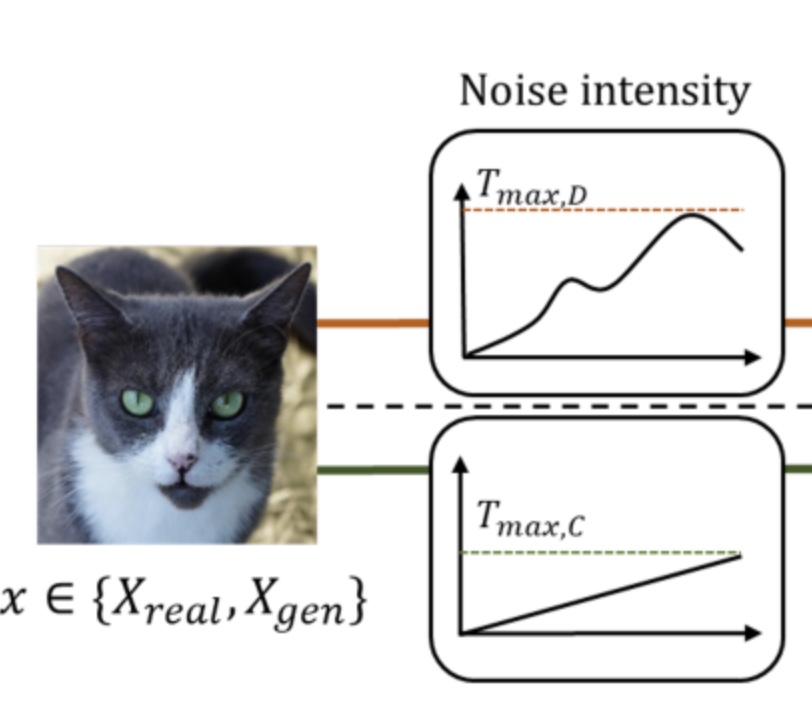

DuDGAN: Improving Class-Conditional GANs via Dual-Diffusion
Taesun Yeom, Chanhoe Gu, and Minhyeok Lee
IEEE Access, 2024
paper / code
We propose a dual-diffusion process to mitigate overfitting in class-conditional GANs.


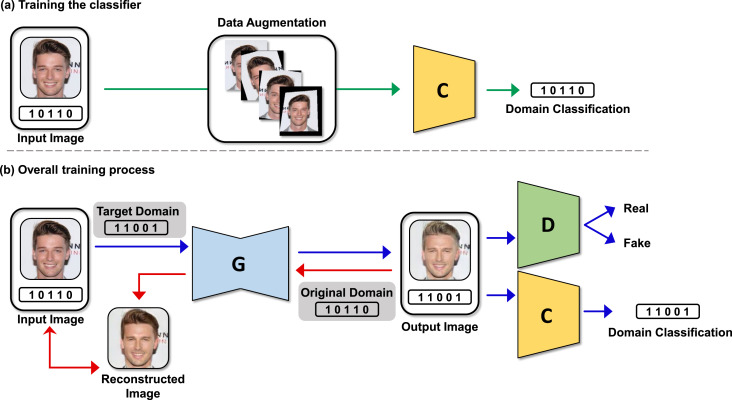

SuperstarGAN: Generative Adversarial Networks for Image-to-Image Translation in Large-Scale Domains
Kanghyeok Ko, Taesun Yeom, and Minhyeok Lee
Neural Networks, 2023
paper / code
This work enables GANs to perform image-to-image translation across large-scale domains.

Education


M.S.–Ph.D. in Artificial Intelligence, Pohang University of Science and Technology (POSTECH), South Korea

2024.09 – Present


B.S. in Mechanical Engineering, Chung-Ang University, South Korea

2018.03 – 2024.08


Design and source code from Jon Barron's website